Large language models (LLMs), AI systems that can process human language and generate new texts in response to written prompts, are now used by people worldwide to complete a wide range of tasks. These systems can quickly produce texts for specific purposes, including work emails, reports, lists, articles and essays, as well as poems, stories, scripts and even song lyrics.

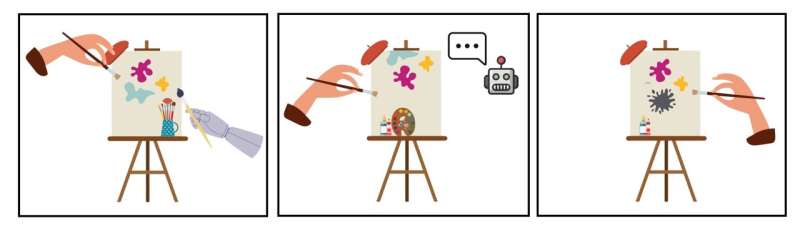

Some people use LLMs as a source of inspiration or ideas, which they then rework in their own words, while others might choose to use AI-generated texts directly without changing them. Although LLMs have proved effective as creative tools, their actual impact on human creativity is still poorly understood.

Researchers at the University of Toronto recently set out to explore how the use of LLMs during creative tasks affects the creativity of human users. Their findings, published on the arXiv preprint server, suggest that these models can impair the ability of humans to think creatively, resulting in less varied and innovative ideas.

“Generative AI tools, such as ChatGPT, are increasingly being used for creative tasks, from writing emails to brainstorming ideas,” Harsh Kumar, co-author of the paper, told Tech Xplore.

“However, there are growing concerns, which have not yet received sufficient attention, about the long-term impact of these tools on human creativity. We hypothesized that the repeated use of large language models (LLMs) might be impairing our ability to think creatively on our own, despite enhancing performance while the tool is in use—similar to the temporary boost provided by performance-enhancing steroids in sports.”

The key goal of the recent study by Kumar and his colleagues was to measure the residual effects of LLM usage on the creativity of human users in an experimental setting. To do this, they conducted two experiments that focused on different aspects of human creativity, known as divergent and convergent thinking.

“The tasks for each experiment were adapted from established psychology literature,” explained Kumar. “In the divergent thinking experiment, participants were asked to generate alternative uses for a given object in each round, while in the convergent thinking experiment, they were presented with three words and tasked with finding a fourth word to connect them (for example, ‘book’ connects ‘shelf,’ ‘log,’ and ‘worm’).”

Both experiments were divided into two distinct phases: an exposure phase and a test phase. During the exposure phase, participants in the experimental condition received responses generated by GPT-4o, the widely used conversational LLM developed by OpenAI.

The responses generated by GPT-4o were specific to the task at hand. For the divergent thinking task, GPT-4o provided relevant lists of ideas, while for the convergent thinking task it suggested a word connecting the three words provided to the participant.

“We also explored the potential of the model to serve as a coach by providing a structured framework for thinking rather than directly offering solutions,” said Kumar. “In the control condition, participants had no access to LLM assistance. During the test phase, all participants completed the tasks independently, enabling us to measure the residual effects of LLM use during the exposure rounds.”

The researchers found that across both experiments, using GPT-4o improved participants' performance during the exposure phase. Interestingly, however, in the test phase, when everyone worked without assistance, participants who had not used GPT-4o outperformed those who had had access to the model during the exposure phase.

“This suggests that designers of LLM-based creativity tools, as well as developers of LLMs, should consider not only the immediate benefits of LLM use but also the potential long-term impact on users’ cognitive abilities,” said Kumar. “Otherwise, these tools may inadvertently contribute to cognitive decline over time. We also observed, consistent with existing literature, that using LLM-generated ideas during exposure can lead to a homogenization of ideas within a group.”

The researchers were also surprised to find that the previously reported homogenization effect (i.e., the tendency of LLM users to produce less diverse ideas) persisted even after participants had stopped using GPT-4o. This lingering effect was particularly pronounced when, during the exposure phase, the model had offered participants a general structured framework designed to guide their thinking.

Overall, the findings collected by Kumar and his colleagues offer valuable insights that could guide future efforts to develop LLMs and AI-powered creative tools. So far, the team has conducted its experiments in a controlled laboratory setting, where participants were exposed to GPT-4o only for a limited time, but the researchers plan to carry out additional studies in more realistic scenarios soon.

“Real-world contexts are more complex and involve prolonged exposure periods,” added Kumar. “To address this, we plan to conduct field experiments with more natural tasks, such as writing a creative story.

“Additionally, we believe that the issue of idea homogenization is significant and carries long-term negative implications for culture and society. As a next step, we intend to explore the design of LLM agents that can mitigate the homogenization of ideas.”

More information:

Harsh Kumar et al, Human Creativity in the Age of LLMs: Randomized Experiments on Divergent and Convergent Thinking, arXiv (2024). DOI: 10.48550/arxiv.2410.03703

© 2024 Science X Network

Citation: Study explores the impact of LLMs on human creativity (2024, October 30) retrieved 30 October 2024 from https://techxplore.com/news/2024-10-explores-impact-llms-human-creativity.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.