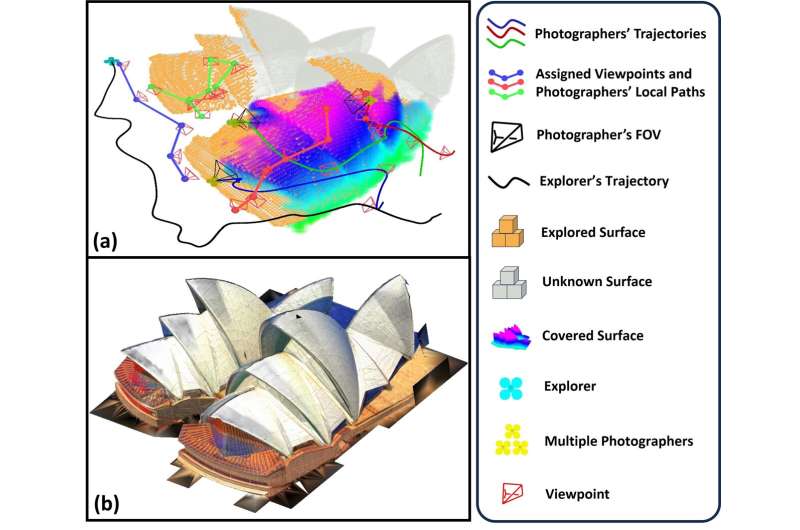

(b) 3D reconstruction result of the above scene produced by the proposed framework. Credit: arXiv (2024). DOI: 10.48550/arxiv.2409.02738

Unmanned aerial vehicles (UAVs), commonly known as drones, have proved to be highly effective systems for monitoring and exploring environments. These autonomous flying robots could also be used to create detailed maps and three-dimensional (3D) visualizations of real-world environments.

Researchers at Sun Yat-Sen University and the Hong Kong University of Science and Technology recently introduced SOAR, a system that allows a team of UAVs to rapidly and autonomously reconstruct environments by simultaneously exploring and photographing them. This system, introduced in a paper published on the arXiv preprint server and set to be presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2024, could have numerous applications, ranging from urban planning to the design of video game environments.

“Our paper stemmed from the increasing need for efficient and high-quality 3D reconstruction using UAVs,” Mingjie Zhang, co-author of the paper, told Tech Xplore.

“We observed that existing methods often fell into two categories: model-based approaches, which can be time-consuming and expensive due to their reliance on prior information, and model-free methods, which explore and reconstruct simultaneously but might be limited by local planning constraints. Our goal was to bridge this gap by developing a system that could leverage the strengths of both approaches.”

The primary objective of the recent study by Zhang and his colleagues was to create a heterogeneous multi-UAV system that could simultaneously explore an environment and photograph it, gathering the data needed to reconstruct it in 3D. To do this, they first set out to develop a technique for incremental viewpoint generation that adapts to scene information as it is acquired.

In addition, the team planned to develop a task assignment strategy that would optimize the efficiency of the multi-UAV team, ensuring that it consistently collected the data necessary to reconstruct environments. Finally, the team ran a series of simulations to assess the effectiveness of their proposed system.

“SOAR is a LiDAR-Visual heterogeneous multi-UAV system designed for rapid autonomous 3D reconstruction,” explained Zhang. “It employs a team of UAVs: one explorer equipped with LiDAR for fast scene exploration and multiple photographers with cameras for capturing detailed images.”

The team's system builds 3D reconstructions in several steps. First, a UAV that they refer to as the "explorer" navigates and maps the environment using a surface frontier-based strategy.
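Frontier-based exploration means steering a robot toward the boundary between mapped and unmapped space. The snippet below is a minimal sketch of how such a boundary could be found on a 3D occupancy grid. It assumes a simple grid with hypothetical cell labels (`UNKNOWN`, `FREE`, `OCCUPIED`) and treats a "surface frontier" as an occupied voxel that touches unknown space; SOAR's actual definition and data structures may differ.

```python
import numpy as np

# Hypothetical cell labels for a 3D occupancy grid (not taken from SOAR).
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def surface_frontiers(grid):
    """Return (x, y, z) indices of occupied voxels that touch unknown space.

    Assumed definition for illustration: a surface frontier is an occupied
    voxel with at least one unknown 6-connected neighbour.
    """
    occupied = grid == OCCUPIED
    unknown = grid == UNKNOWN
    # Pad with False so voxels on the grid border have neighbours to check;
    # np.roll's wrap-around only ever lands in the padding, which is sliced off.
    padded = np.pad(unknown, 1, constant_values=False)
    touches_unknown = np.zeros_like(occupied)
    for axis in range(3):
        for shift in (-1, 1):
            # Shift the unknown mask by one voxel along each axis direction.
            touches_unknown |= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return np.argwhere(occupied & touches_unknown)
```

An explorer built this way would repeatedly pick one of the returned voxels (for example the nearest reachable one) as its next goal, re-scan with its LiDAR, and update the grid until no frontiers remain.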

As the explorer gradually maps the environment, the system incrementally generates viewpoints that together cover all of the surfaces mapped so far. The other UAVs, referred to as "photographers," then visit these viewpoints and capture images there.
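Choosing a small set of viewpoints that together see every mapped surface is a coverage (set cover) problem. The sketch below uses the classic greedy set-cover heuristic as a stand-in; it is not the paper's algorithm. It assumes each candidate viewpoint has already been scored with the set of surface-point IDs it can see, and it only works on points that are new since the last update, which is one simple way to make the process incremental.

```python
def select_viewpoints(candidates, new_surface, already_covered):
    """Greedily choose viewpoints that cover newly observed surface points.

    candidates: dict mapping a viewpoint id to the set of surface-point ids it sees
    new_surface: surface-point ids added to the map since the last update
    already_covered: surface-point ids covered by previously chosen viewpoints
    Returns (chosen viewpoint ids, points that no candidate can see).
    """
    uncovered = set(new_surface) - set(already_covered)
    chosen = []
    while uncovered:
        # Take the candidate that sees the most still-uncovered points.
        best = max(candidates, key=lambda v: len(candidates[v] & uncovered), default=None)
        if best is None:
            break  # no candidates at all
        gain = candidates[best] & uncovered
        if not gain:
            break  # the remaining points are not visible from any candidate
        chosen.append(best)
        uncovered -= gain
    return chosen, uncovered
```

For example, `select_viewpoints({"a": {1, 2, 3}, "b": {3, 4}, "c": {4}}, {1, 2, 3, 4, 5}, {5})` returns `(["a", "b"], set())`: point 5 was covered earlier, viewpoint "a" covers three new points, and "b" covers the last one.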

“The viewpoints are clustered and assigned to photographers using the Consistent-MDMTSP method, balancing workload and maintaining task consistency,” said Zhang. “Each photographer plans an optimal path to capture images from the assigned viewpoints. The collected images and their corresponding poses are then used to generate a textured 3D model.”
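The last step Zhang describes, each photographer ordering its assigned viewpoints into a short flight path, resembles an open traveling salesman problem. The sketch below uses a common textbook heuristic (nearest-neighbour construction followed by 2-opt improvement) as an illustrative stand-in; the function names are hypothetical, it uses straight-line distances only, and it ignores obstacles and flight dynamics that a real planner such as SOAR's would have to handle.

```python
import math


def path_length(points):
    """Total straight-line length of a path visiting points in order."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def plan_path(start, viewpoints):
    """Order viewpoints into a short open path beginning at `start`."""
    # Nearest-neighbour construction: always fly to the closest unvisited viewpoint.
    remaining = list(viewpoints)
    path = [start]
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(path[-1], p))
        remaining.remove(nxt)
        path.append(nxt)
    # 2-opt refinement: reverse a segment whenever that shortens the path.
    # Index 0 (the photographer's current position) is never moved.
    improved = True
    while improved:
        improved = False
        for i in range(1, len(path) - 1):
            for j in range(i + 1, len(path)):
                candidate = path[:i] + path[i:j + 1][::-1] + path[j + 1:]
                if path_length(candidate) < path_length(path) - 1e-9:
                    path = candidate
                    improved = True
    return path
```

With `plan_path((0, 0), [(3, 0), (1, 0), (2, 0)])` the photographer visits the viewpoints in the order (1, 0), (2, 0), (3, 0), for a total length of 3.0.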

A distinctive feature of SOAR is that it combines LiDAR and visual sensing: the LiDAR-equipped explorer enables fast exploration of the environment, while the camera-equipped photographers capture the images needed for high-quality reconstructions.

“Our system adapts to the dynamically changing scene information, ensuring optimal coverage with minimal viewpoints,” said Zhang. “By consistently assigning tasks to UAVs, it also improves scanning efficiency and reduces unnecessary detours for photographers.”
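The benefit of consistent assignment can be illustrated with a much simpler rule than the authors' Consistent-MDMTSP method (MDMTSP usually denotes the multi-depot multiple traveling salesman problem), which is not reproduced here. In the hypothetical greedy sketch below, a viewpoint keeps the photographer it was given in the previous planning round whenever that photographer still has room, and only new or displaced viewpoints go to the nearest photographer under a per-UAV capacity limit. The capacity parameter and the data layout are assumptions for illustration.

```python
import math


def assign_viewpoints(viewpoints, photographers, previous, capacity):
    """Assign viewpoints to photographers, preferring earlier assignments.

    viewpoints: dict viewpoint id -> (x, y, z) position
    photographers: dict photographer name -> current (x, y, z) position
    previous: dict viewpoint id -> photographer name from the last round
    capacity: hypothetical maximum number of viewpoints per photographer
    """
    load = {name: 0 for name in photographers}
    assignment = {}
    # 1. Consistency: keep each viewpoint with its previous owner if there is room.
    for vp in viewpoints:
        owner = previous.get(vp)
        if owner in load and load[owner] < capacity:
            assignment[vp] = owner
            load[owner] += 1
    # 2. Remaining viewpoints go to the nearest photographer that still has room.
    for vp, pos in viewpoints.items():
        if vp in assignment:
            continue
        available = [name for name in photographers if load[name] < capacity]
        if not available:
            break  # everyone is full; leave the rest for the next planning round
        best = min(available, key=lambda name: math.dist(photographers[name], pos))
        assignment[vp] = best
        load[best] += 1
    return assignment
```

Keeping earlier assignments stable, even when another UAV is momentarily closer, is what prevents photographers from being re-routed back and forth every time new viewpoints appear; the capacity limit is a crude stand-in for workload balancing.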

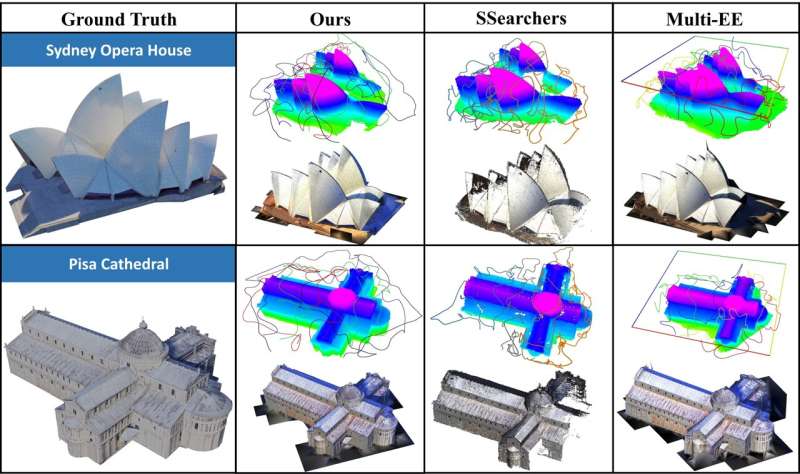

Zhang and his colleagues evaluated their proposed system in a series of simulations. The results were promising: in these tests, SOAR outperformed other state-of-the-art methods for environment reconstruction.

“A key achievement of our study is the introduction of a novel framework for fast autonomous aerial reconstruction,” said Zhang. “Central to this framework is the development of several key algorithms that employ an incremental design, striking a crucial balance between real-time planning capabilities and overall efficiency, which is essential for online and dynamic reconstruction tasks.”

In the future, SOAR could be used to tackle a wide range of real-world problems that require the fast and accurate reconstruction of 3D environments. For instance, it could be used to create detailed 3D models of cities and infrastructure, or to help historians preserve a country's cultural heritage by reconstructing historic sites and artifacts.

“SOAR could also be used for disaster response and assessment,” said Zhang. “Specifically, it could allow responders to rapidly assess damage after natural disasters and plan rescue and recovery efforts.”

The team's system could also support the inspection of infrastructure and construction sites, allowing workers to map these sites in detail. Finally, it could be used to create 3D models of video game environments inspired by real cities and natural landscapes.

“We are enthusiastic about the potential for future research in this area,” said Zhang. “Our plans include bridging the Sim-to-Real Gap: We aim to tackle the challenges associated with transitioning SOAR from simulation to real-world environments. This will involve addressing issues like localization errors and communication disruptions that can occur in real-world deployments.”

In their next studies, the researchers plan to develop new task allocation strategies that could further improve coordination between the UAVs and the speed at which they map environments. They also plan to add scene prediction and information processing modules to their system, which could allow it to anticipate the structure of a given environment and further speed up the reconstruction process.

“We will also explore the implementation of active reconstruction techniques, where the system receives real-time feedback during the reconstruction process,” added Zhang.

“This will allow SOAR to adapt its planning on-the-fly and achieve even better results. Moreover, we will investigate incorporating factors like camera angle and image quality directly into the planning process, which will ensure that the captured images are optimized for generating high-quality 3D reconstructions. These research directions represent exciting opportunities to advance the capabilities of SOAR and push the boundaries of autonomous 3D reconstruction using UAVs.”

More information:

Mingjie Zhang et al, SOAR: Simultaneous Exploration and Photographing with Heterogeneous UAVs for Fast Autonomous Reconstruction, arXiv (2024). DOI: 10.48550/arxiv.2409.02738

© 2024 Science X Network

Citation:

LiDAR-based system allows unmanned aerial vehicle team to rapidly reconstruct environments (2024, September 27) retrieved 27 September 2024 from https://techxplore.com/news/2024-09-lidar-based-unmanned-aerial-vehicle.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.