How Can AI Propel Exploration of the Cosmos, the Oceans, and the Earth?

Perspectives from professors Katie Bouman, Matthew Graham, John Dabiri, and Zachary Ross

Image credit: Caltech

BLACK HOLES

Computational imaging expert Katie Bouman describes how machine learning enables imaging of black holes and other astrophysical phenomena.

We use machine learning to produce images from data gathered by the Event Horizon Telescope (EHT), a network of telescopes located around the world that observe a black hole in sync. Together, these telescopes function as one giant virtual telescope. We combine their data computationally and develop algorithms to fill in the gaps in coverage. These gaps in our virtual telescope’s coverage introduce a lot of uncertainty into the picture we form. Characterizing this uncertainty, or quantifying the range of possible appearances the black hole could take on, took the worldwide team months for the M87* black hole image and literally years for the more recent picture of the Sgr A* black hole in the center of our Milky Way.

We can use the image-generating power of a class of machine learning methods called deep-learning generative models to capture the uncertainty in the EHT images more efficiently. The models we are developing quickly generate a whole distribution of possible images that fit the complicated data we collect, not just a single image. We also have been using these same generative models to evaluate uncertainty for exoplanet orbits, medical imaging, and seismology.

One area where we are excited about using machine learning is in helping to optimize sensors for computational imaging. For instance, we are currently developing machine learning methods to help identify locations for new telescopes that we build into the EHT. By designing the telescope placement and the image-reconstruction software simultaneously, we can squeeze more information out of the data we collect, recovering higher-fidelity imagery with less uncertainty. This idea of co-designing computational imaging systems also extends far beyond the EHT into medical imaging and other domains.

Caltech astronomy professors Gregg Hallinan and Vikram Ravi are leading an effort called the DSA-2000, in which 2,000 telescopes in Nevada will image the entire sky in radio wavelengths. Unlike the EHT, where we have to fill in gaps in the data, this project will collect a huge amount of data: about 5 terabytes per second. All the processing steps, like correlation, calibration, and imaging, have to be fast and automated. There is no time to do this work using traditional methods. Our group is collaborating to develop deep learning methods that automatically clean up the images so users receive images within minutes of collecting the data.

—Katherine Bouman, assistant professor of computing and mathematical sciences, electrical engineering and astronomy; Rosenberg Scholar; and Heritage Medical Research Institute Investigator

ASTRONOMY

Astronomer Matthew Graham, the project scientist for the Zwicky Transient Facility, explains how AI is changing astrophysics.

The sociological change that we’ve seen in astronomy in the last 20 years has to do with big data. The data are too complicated, too large, and sometimes arrive from the telescopes too fast, streaming off the mountain at gigabytes a second. Therefore, we look to machine learning.

A lot of people like these new big data sets. Let’s say that you’re looking for a one-in-a-million object. Statistically, in a data set that has a million objects, you’d expect to find one. In a data set like the Rubin Observatory’s Legacy Survey of Space and Time, which is going to have 40 billion objects in it, you’d expect to find 40,000 of those one-in-a-million objects.
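The arithmetic behind that estimate can be sketched in a few lines of Python. The function name `expected_rare_objects` is hypothetical and introduced only for illustration, and the calculation assumes the rare objects are spread evenly through a survey, so the result is an expected count rather than a guarantee.

```python
def expected_rare_objects(n_objects: int, one_in: int) -> float:
    """Expected number of objects whose rarity is one in `one_in`."""
    return n_objects / one_in

# A catalog of one million objects versus a 40-billion-object survey.
small_catalog = expected_rare_objects(1_000_000, 1_000_000)
large_survey = expected_rare_objects(40_000_000_000, 1_000_000)
print(small_catalog, large_survey)
```

The larger survey turns a single lucky find into a sample big enough to study statistically.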

I’m interested in active galactic nuclei, where you have a supermassive black hole in the middle of a galaxy, and surrounding the black hole is a disk of dust and gas that falls into it and makes it super bright. So, I can go through a data set to try to find those sorts of things. I have an idea of what pattern I should look for, so I figure out the machine learning approach I want to develop to do that. Or I could simulate what these things should look like, then train up my algorithm so that it can find simulated objects like them and then apply it to real data.

Today, we’re using computers for the repetitive work that undergraduates or grad students previously did. We are moving, however, into areas where the machine learning is becoming more sophisticated, where we start saying to the computers, “Tell me what patterns you’re finding in here.”

—Matthew Graham, research professor of astronomy

OCEAN

Engineer John Dabiri describes how AI enhances ocean monitoring and exploration.

Only 5 to 10 percent of the volume of the ocean has been explored. Traditional ship-based measurements are expensive. Increasingly, scientists and engineers use underwater robots to survey the ocean floor, look at interesting places or objects, and study and monitor ocean chemistry, ecology, and dynamics.

Our group develops technology for swarms of tiny self-contained underwater drones and bionic jellyfish to enable exploration.

The drones might encounter complex currents as they navigate the ocean, and fighting them wastes energy or pulls the drones off course. Instead, we want these robots to take advantage of the currents, much like hawks soar on thermals in the air to reach great heights.

However, in the complex ocean currents, we can’t calculate and control each robot’s trajectory like we would for a spacecraft.

When we want robots to explore the deep ocean, especially in swarms, it’s almost impossible to control them with a joystick from 20,000 feet away at the surface. We also can’t feed them data about the local ocean currents they need to navigate because we can’t detect the robots from the surface. Instead, at a certain point we need ocean-borne drones to be able to make decisions about how to move for themselves.

To help the drones navigate independently, we are endowing them with AI, specifically deep reinforcement learning networks written onto low-power microcontrollers that are only about a square inch in size. Using data from the drones’ gyroscopes and accelerometers, the AI repeatedly calculates trajectories. With every try, it learns how to help the drone efficiently coast and pulse its way through any currents it encounters.
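The idea of learning when to coast and when to pulse can be illustrated with a deliberately tiny example. This is not the group's deep reinforcement learning network: it swaps in tabular Q-learning, a much simpler relative, and every detail here (the one-dimensional `CURRENTS` layout, the cost values, the function names, and using the cell index as the state instead of gyroscope and accelerometer readings) is an assumption made up for illustration.

```python
import random

# Toy 1-D "ocean": each cell has a current of +1 (favorable), 0 (still),
# or -1 (adverse). The layout is invented for this sketch.
CURRENTS = [1, 1, 0, 1, -1, 1, 0, 1]
GOAL = len(CURRENTS)            # reaching this position ends an episode
ACTIONS = ("coast", "pulse")
TIME_COST, PULSE_COST = 0.1, 1.0

def step(pos, action):
    """Coasting drifts with the current; pulsing pushes one cell forward."""
    move = 1 if action == "pulse" else CURRENTS[pos]
    new_pos = max(pos + move, 0)
    reward = -TIME_COST - (PULSE_COST if action == "pulse" else 0.0)
    return new_pos, reward, new_pos >= GOAL

def train(episodes=2000, alpha=0.5, epsilon=0.2, max_steps=100, seed=0):
    """Epsilon-greedy tabular Q-learning with no discounting."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in CURRENTS]   # q[pos][action_index]
    for _ in range(episodes):
        pos = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                a = rng.randrange(2)                     # explore
            else:
                a = 0 if q[pos][0] >= q[pos][1] else 1   # exploit
            new_pos, reward, done = step(pos, ACTIONS[a])
            target = reward if done else reward + max(q[new_pos])
            q[pos][a] += alpha * (target - q[pos][a])
            if done:
                break
            pos = new_pos
    return q

q_table = train()
policy = [ACTIONS[0 if qc >= qp else 1] for qc, qp in q_table]
print(policy)
```

After training, the learned policy coasts wherever the current carries the drone forward and spends energy on a pulse only in still or adverse water, which is the behavior the paragraph above describes in miniature.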

—John Dabiri (MS ’03, PhD ’05), Centennial Professor of Aeronautics and Mechanical Engineering

EARTHQUAKES

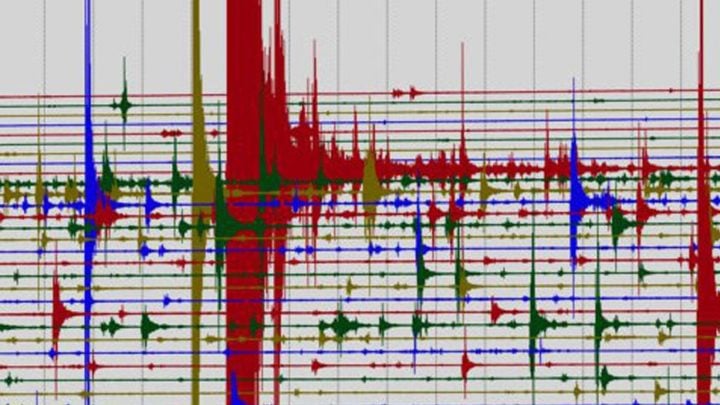

Seismologist Zachary Ross explains how machine learning helps with earthquake monitoring.

The word “earthquake” makes you think of the largest moment of shaking. But tremors precede and follow that moment. To understand the whole process, you have to analyze all the earthquake signals to see the tremors’ collective behavior. The more tremors and earthquakes you look at, the more they illuminate the web of fault structures inside the earth that are responsible for earthquakes.

Monitoring earthquake signals is challenging. Seismologists work with far more data from the Southern California Seismic Network than the public sees. It’s too much for us to keep up with manually. And we have no easy way to distinguish earthquake tremors from nuisance signals, such as those from loud events or big trucks.

Students in the Seismo Lab used to have to spend substantial time measuring seismic wave properties. You get the hang of it after five minutes, and it’s not fun. These repetitive tasks are barriers to real scientific analysis. Our students would rather spend their time thinking about what’s new.

Now, AI helps us recognize the signals that we’re interested in. First, we train machine learning algorithms to detect different types of signals in data that have been carefully hand annotated. Then we apply our model to the new incoming data. The model makes decisions about as well as seismologists can.
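The train-then-apply workflow described above can be sketched with a toy classifier. This is a stand-in, not the Seismo Lab's model: the synthetic traces, the single peak-to-median feature, the midpoint threshold rule, and all function names are assumptions chosen to keep the example small. Real systems learn from hand-annotated seismograms with far richer models.

```python
import math
import random
import statistics

def make_trace(rng, is_event, n=200):
    """Synthetic trace: unit Gaussian noise, plus a decaying burst for events."""
    trace = [rng.gauss(0.0, 1.0) for _ in range(n)]
    if is_event:
        onset = rng.randrange(20, 100)
        for i in range(onset, n):
            t = i - onset
            trace[i] += 20.0 * math.exp(-t / 20.0) * math.sin(2 * math.pi * t / 8.0)
    return trace

def feature(trace):
    """Peak amplitude relative to the typical (median) amplitude."""
    mags = [abs(x) for x in trace]
    return max(mags) / statistics.median(mags)

def make_dataset(rng, n_per_class):
    """Labeled (trace, is_event) pairs; stands in for hand-annotated data."""
    return [(make_trace(rng, label), label)
            for label in (False, True) for _ in range(n_per_class)]

def fit_threshold(labeled):
    """'Training': place the threshold midway between the two class means."""
    noise = [feature(tr) for tr, is_event in labeled if not is_event]
    events = [feature(tr) for tr, is_event in labeled if is_event]
    return (statistics.mean(noise) + statistics.mean(events)) / 2.0

rng = random.Random(42)
threshold = fit_threshold(make_dataset(rng, 100))    # step 1: train on labels

new_data = make_dataset(rng, 100)                    # step 2: "incoming" data
predictions = [feature(tr) > threshold for tr, _ in new_data]
accuracy = sum(p == label
               for p, (_, label) in zip(predictions, new_data)) / len(new_data)
print(f"threshold={threshold:.2f}, accuracy={accuracy:.2f}")
```

The second data set is never seen during training, so its accuracy gives a rough sense of how such a model would behave on new incoming signals.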

—Zachary Ross, assistant professor of geophysics and William H. Hurt Scholar

Source: Caltech