Separate developments in speech-recognition technology from IBM and from researchers at the University of California campuses in San Francisco and Berkeley offer promising news for patients with vocal paralysis and speech loss.

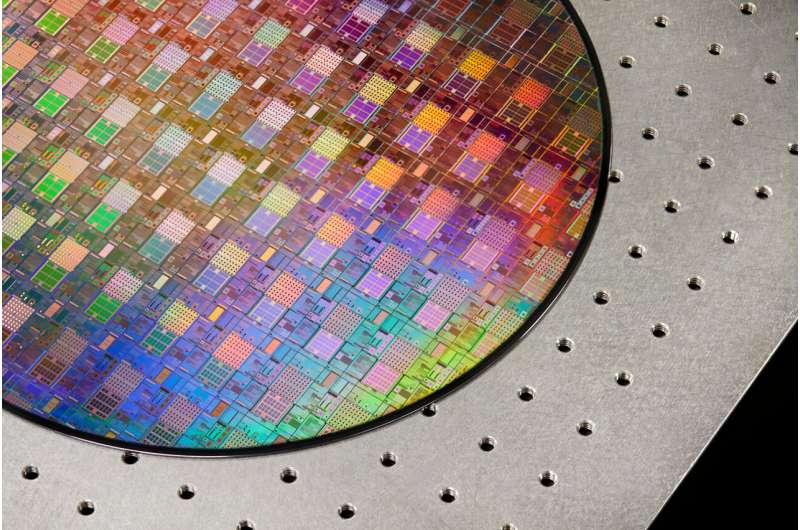

IBM reported the creation of a faster and more energy-efficient computer chip capable of turbo-charging speech-recognition model output.

The explosive growth of large language models for AI projects has exposed hardware limitations that lead to lengthier training periods and spiraling energy consumption.

In terms of energy expenditure, MIT Technology Review has reported that training a single large AI model can generate more than 626,000 pounds of carbon dioxide, almost five times the amount an average American car emits in its lifetime.

A key factor behind the huge energy drain of AI operations is the constant shuttling of data back and forth between memory and processors.

Seeking a solution, IBM researchers built a prototype that incorporates phase-change memory devices within the chip. These devices carry out the fundamental AI computations known as multiply-accumulate (MAC) operations right where the data is stored, bypassing the standard time- and energy-consuming routine of transporting data between memory and processor.
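To make the idea concrete, here is a toy numerical model of a multiply-accumulate operation performed "in memory," written in Python. It is a sketch under stated assumptions, not IBM's design: each weight is treated as a device conductance and each input as a voltage, so the current summed along an output line approximates the weighted sum. The function names are hypothetical, and the Gaussian noise level (`noise_std`) standing in for analog imprecision is an arbitrary illustrative value.

```python
import random

def digital_mac(weights, inputs):
    """Conventional multiply-accumulate: each output is sum(w * x) over a row."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def analog_crossbar_mac(weights, inputs, noise_std=0.01, seed=0):
    """Toy model of an in-memory crossbar (illustrative assumption, not IBM's chip).

    Each weight plays the role of a device conductance and each input a voltage.
    Ohm's law gives a current g * v per device, and Kirchhoff's current law sums
    those currents on each output line, so the line current approximates sum(w * x).
    Real conductances cannot be negative, so signed weights would need more than
    one device in practice; this sketch ignores that detail.
    """
    rng = random.Random(seed)
    outputs = []
    for row in weights:
        current = 0.0
        for g, v in zip(row, inputs):
            # The programmed conductance deviates slightly from the target weight
            g_actual = g + rng.gauss(0.0, noise_std)
            current += g_actual * v
        outputs.append(current)
    return outputs

weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]
inputs = [1.0, 0.5, -1.0]
print([round(v, 3) for v in digital_mac(weights, inputs)])  # → [0.3, 1.1]
print(analog_crossbar_mac(weights, inputs))  # close to the digital result, plus noise
```

The two results agree to within the injected noise. The point of the sketch is where the arithmetic happens: the weights never leave the array, which is the data movement the IBM design avoids.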

“These are, to our knowledge, the first demonstrations of commercially relevant accuracy levels on a commercially relevant model,” said IBM’s Stefano Ambrogio in a study published Aug. 23 in the journal Nature.

“Our work indicates that, when combined with time-, area- and energy-efficient implementation of the on-chip auxiliary compute, the high energy efficiency and throughput delivered … can be extended to an entire analog-AI system,” he said.

In processor-intensive speech recognition operations, IBM’s prototype achieved 12.4 trillion operations per second per watt, an efficiency level up to hundreds of times better than the most powerful CPUs and GPUs currently in use.
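The efficiency figure translates directly into energy per operation: one watt is one joule per second, so (operations per second) per watt equals operations per joule. A short Python sketch of that arithmetic, using only the 12.4 figure reported above (the function name is illustrative):

```python
REPORTED_TOPS_PER_WATT = 12.4  # figure reported for IBM's prototype

def energy_per_op_joules(tops_per_watt: float) -> float:
    """Energy per operation in joules.

    1 W = 1 J/s, so (operations per second) per watt = operations per joule.
    """
    ops_per_joule = tops_per_watt * 1e12  # "tera" = 10**12
    return 1.0 / ops_per_joule

energy_fj = energy_per_op_joules(REPORTED_TOPS_PER_WATT) * 1e15  # joules -> femtojoules
print(f"{energy_fj:.1f} fJ per operation")  # → 80.6 fJ per operation
```

Because the relationship is a simple reciprocal, a chip that is 100 times less efficient spends 100 times more energy on each operation.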

Meanwhile, researchers at UC San Francisco and UC Berkeley say they have devised a brain-computer interface for people who have lost the ability to speak. It generates words from the brain activity produced as a user attempts to vocalize.

Edward Chang, chair of neurological surgery at UC San Francisco, said, “Our goal is to restore a full, embodied way of communicating, which is the most natural way for us to talk with others.”

Chang and his team implanted two tiny sensors on the surface of the brain of a woman with amyotrophic lateral sclerosis (ALS), a neurodegenerative disease that gradually robs its victims of mobility and speech.

Although the subject could still make sounds, ALS had left her unable to control her lips, tongue and larynx well enough to articulate coherent words.

The sensors were connected through a brain-computer interface to banks of computers housing language-decoding software.

The woman went through 25 training sessions lasting four hours each in which she read sets of between 260 and 480 sentences. Her brain activity during readings was translated by the decoder, which detected phonemes and assembled them into words.
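The article does not detail how the decoder turns phonemes into words. As a toy illustration of the general idea only, the Python sketch below splits a sequence of phoneme symbols into words using a tiny, hypothetical pronunciation lexicon (ARPAbet-style symbols) and dynamic programming. A practical decoder would also have to weigh uncertain phoneme predictions and how likely each word sequence is, which this sketch ignores.

```python
# Hypothetical mini pronunciation lexicon (ARPAbet-style phoneme symbols)
LEXICON = {
    ("HH", "AH", "L", "OW"): "hello",
    ("HH", "AW"): "how",
    ("AA", "R"): "are",
    ("Y", "UW"): "you",
}

def phonemes_to_words(phonemes, lexicon=LEXICON):
    """Split a phoneme sequence into lexicon words; return None if impossible."""
    n = len(phonemes)
    max_len = max(len(key) for key in lexicon)
    # best[i] holds a word list covering phonemes[:i], or None if unreachable
    best = [None] * (n + 1)
    best[0] = []
    for i in range(n):
        if best[i] is None:
            continue
        # Try every lexicon entry that could start at position i
        for j in range(i + 1, min(n, i + max_len) + 1):
            word = lexicon.get(tuple(phonemes[i:j]))
            if word is not None and best[j] is None:
                best[j] = best[i] + [word]
    return best[n]

print(phonemes_to_words(["HH", "AW", "AA", "R", "Y", "UW"]))  # → ['how', 'are', 'you']
```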

Researchers then synthesized her speech, based on a recording of her speaking at a wedding years earlier, and designed an avatar that reflected her facial movements.

The results were promising.

After four months of training, the model was able to track the subject’s attempted vocalizations and convert them into intelligible words.

With a training vocabulary of 125,000 words, which covered virtually anything the subject would want to say, the accuracy rate was 76%.

When the vocabulary was limited to 50 words, the translation system did much better, correctly identifying her speech 90% of the time.
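The article reports accuracy as a percentage without defining it. Speech-recognition systems are commonly scored by word error rate (WER): the number of word substitutions, insertions and deletions needed to turn the decoded text into what was actually said, divided by the number of words said. Assuming the reported accuracy is roughly 1 minus WER (an assumption, not stated in the source), 76% accuracy would mean about one word in four is wrong. A minimal Python sketch of WER using edit distance over words; the example sentences are hypothetical:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + insertions + deletions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # d[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / len(ref)

# One wrong word out of five gives a WER of 0.2
print(word_error_rate("please bring me some water", "please bring me sum water"))  # → 0.2
```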

Furthermore, the system was able to translate the subject’s speech at a rate of 62 words per minute. That is triple the word-recognition rate of earlier, similar experiments, but researchers acknowledge that improvements will be needed to match the 160-word-per-minute rate of natural speech.
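The speed figures can be put in everyday terms with simple arithmetic. The Python sketch below uses only the 62 and 160 words-per-minute figures from the article; the 20-word message length is a hypothetical example.

```python
DECODER_WPM = 62   # rate reported for the brain-computer interface
NATURAL_WPM = 160  # rate of natural speech cited in the article

def seconds_to_say(n_words: int, words_per_minute: float) -> float:
    """Time in seconds to produce n_words at a given rate."""
    return n_words * 60.0 / words_per_minute

message_words = 20  # hypothetical message length
print(round(seconds_to_say(message_words, DECODER_WPM), 1))  # → 19.4
print(seconds_to_say(message_words, NATURAL_WPM))            # → 7.5
print(round(NATURAL_WPM / DECODER_WPM, 2))                   # → 2.58
```

In other words, the decoder would need to become roughly 2.6 times faster to keep pace with ordinary conversation.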

“This is a scientific proof of concept, not an actual device people can use in everyday life,” said Frank Willett, co-author of the study posted Aug. 23 in Nature. “But it’s a big advance toward restoring rapid communication to people with paralysis who can’t speak.”

More information:

S. Ambrogio et al., An analog-AI chip for energy-efficient speech recognition and transcription, Nature (2023). DOI: 10.1038/s41586-023-06337-5

H. Wang, Analogue chip paves the way for sustainable AI, Nature (2023). DOI: 10.1038/d41586-023-02569-7

© 2023 Science X Network

Citation: Advances in AI, chips boost voice recognition (2023, August 28), retrieved 28 August 2023 from https://techxplore.com/news/2023-08-advances-ai-chips-boost-voice.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.