People with voice disorders, including those with pathological vocal cord conditions and those recovering from laryngeal cancer surgery, often find it difficult or impossible to speak. That may soon change.

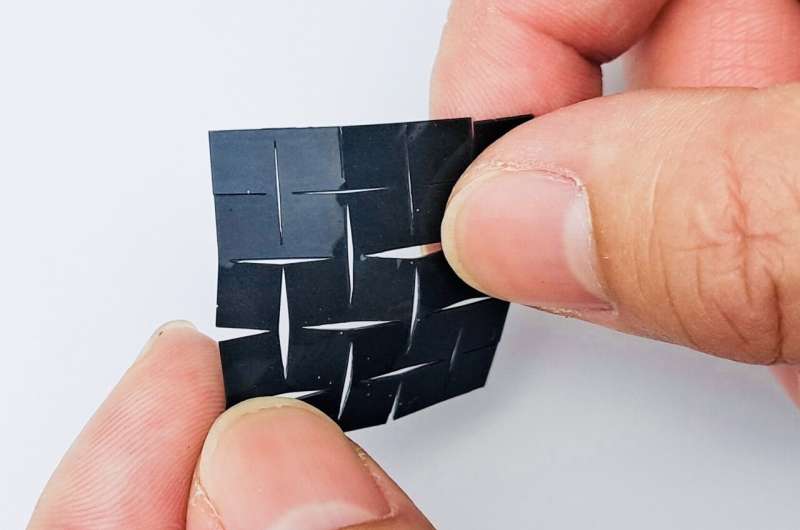

A team of UCLA engineers has invented a soft, thin, stretchy device, about 1.4 square inches in area, that can be attached to the skin outside the throat to help people with dysfunctional vocal cords regain their voice function. Their advance is detailed this week in the journal Nature Communications.

The new bioelectric system, developed by Jun Chen, an assistant professor of bioengineering at the UCLA Samueli School of Engineering, and his colleagues, detects movement in a person’s laryngeal muscles and, with the help of machine learning, translates those signals into audible speech with nearly 95% accuracy.

The breakthrough is the latest in Chen’s efforts to help those with disabilities. His team previously developed a wearable glove capable of translating American Sign Language into English speech in real time to help users of ASL communicate with those who don’t know how to sign.

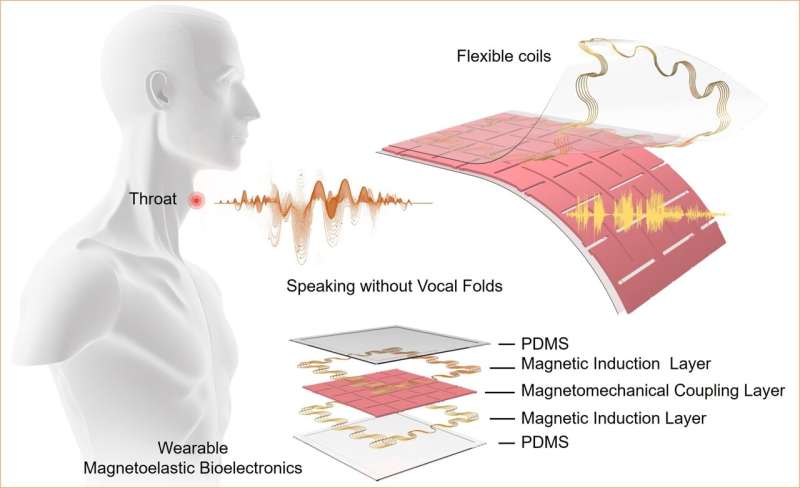

The tiny new patch-like device is made up of two components. One, a self-powered sensing component, detects and converts signals generated by muscle movements into high-fidelity, analyzable electrical signals; these electrical signals are then translated into speech signals using a machine-learning algorithm. The other, an actuation component, turns those speech signals into the desired voice expression.
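The sensing-to-speech flow described above can be pictured as three chained steps. The following is a minimal, hypothetical Python sketch, not the team's implementation: the function names `window_rms` and `speak`, the RMS-per-window feature, and the `voice_bank` of stored voice clips are illustrative assumptions, and the machine-learning classifier is simply passed in as a function.

```python
import math
from typing import Callable, Dict, List, Sequence

# Hypothetical type: a voice clip is represented here as a list of audio samples.
VoiceClip = List[float]


def window_rms(signal: Sequence[float], window: int) -> List[float]:
    """Summarize a raw sensor trace as the RMS of each non-overlapping window.

    RMS is an illustrative feature choice; the article does not say which
    features the real system extracts. A trailing partial window is dropped.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    features = []
    for start in range(0, len(signal) - window + 1, window):
        chunk = signal[start:start + window]
        features.append(math.sqrt(sum(x * x for x in chunk) / window))
    return features


def speak(
    signal: Sequence[float],
    classify: Callable[[List[float]], str],
    voice_bank: Dict[str, VoiceClip],
    window: int = 4,
) -> VoiceClip:
    """Chain sensing, recognition and actuation for one utterance (hypothetical)."""
    features = window_rms(signal, window)  # sensing: raw electrical signal -> features
    label = classify(features)             # machine learning: features -> intended sentence
    return voice_bank[label]               # actuation: sentence -> voice signal to output


if __name__ == "__main__":
    bank = {"hi": [0.1, 0.2], "love": [0.3]}
    clip = speak([1, 1, 2, 2], lambda f: "hi" if max(f) > 1.5 else "love", bank, window=2)
    print(clip)  # [0.1, 0.2]
```

Keeping the classifier as a plug-in argument mirrors the article's split between the sensing component and the machine-learning step: the pipeline shape stays the same whatever model does the recognition.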

The two components each contain two layers: a layer of polydimethylsiloxane (PDMS), an elastic, biocompatible silicone compound, and a magnetic induction layer made of copper induction coils. Sandwiched between the two components is a fifth layer of PDMS mixed with micromagnets, which generates a magnetic field.

Using a soft magnetoelastic sensing mechanism developed by Chen’s team in 2021, the device detects changes in the magnetic field caused by mechanical forces, in this case, the movement of the laryngeal muscles. Serpentine induction coils embedded in the magnetoelastic layers convert these changes into the high-fidelity electrical signals used for sensing.
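The article does not give the device’s governing equations, but induction coils of this kind rely on ordinary electromagnetic induction. As a textbook reminder rather than a model of this specific device, Faraday’s law says that a coil of $N$ turns develops an electromotive force $\mathcal{E}$ proportional to how quickly the magnetic flux $\Phi_B$ through it changes:

$$
\mathcal{E} = -N \frac{d\Phi_B}{dt}, \qquad \Phi_B = \int_S \mathbf{B} \cdot d\mathbf{A}
$$

When laryngeal muscle movement deforms the magnetoelastic layer, the field $\mathbf{B}$ passing through the coils changes and a voltage is induced without an external power source; if nothing moves, $d\Phi_B/dt = 0$ and no signal is produced. This is one plausible reading of why the article calls the sensing component self-powered.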

Measuring 1.2 inches on each side, the device weighs about 7 grams and is just 0.06 inch thick. With double-sided biocompatible tape, it can easily adhere to an individual’s throat near the location of the vocal cords and can be reused by reapplying tape as needed.
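For reference, the stated dimensions convert to metric units as follows, using the exact definition 1 in = 2.54 cm. Because the inch figures are themselves rounded, the results are approximate:

$$
\begin{aligned}
\text{side} &= 1.2\ \text{in} \times 2.54\ \tfrac{\text{cm}}{\text{in}} \approx 3.05\ \text{cm} \\
\text{area} &= (1.2\ \text{in})^2 = 1.44\ \text{in}^2 \approx 9.29\ \text{cm}^2 \\
\text{thickness} &= 0.06\ \text{in} \times 25.4\ \tfrac{\text{mm}}{\text{in}} \approx 1.52\ \text{mm}
\end{aligned}
$$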

Voice disorders are prevalent across all ages and demographic groups; research has shown that nearly 30% of people will experience at least one such disorder in their lifetime. Even with therapeutic approaches such as surgical interventions and voice therapy, voice recovery can take from three months to a year, and some invasive techniques require a significant period of mandatory postoperative voice rest.

“Existing solutions such as handheld electro-larynx devices and tracheoesophageal puncture procedures can be inconvenient, invasive or uncomfortable,” said Chen, who leads the Wearable Bioelectronics Research Group at UCLA and has been named one of the world’s most highly cited researchers five years in a row. “This new device presents a wearable, non-invasive option capable of assisting patients in communicating during the period before treatment and during the post-treatment recovery period for voice disorders.”

How machine learning enables the wearable tech

In their experiments, the researchers tested the wearable technology on eight healthy adults. They collected data on laryngeal muscle movement and used a machine-learning algorithm to correlate the resulting signals with specific words, then selected a corresponding output voice signal to be delivered through the device’s actuation component.
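The article does not name the machine-learning model, so the following is only a minimal illustration of correlating sensor signals with labels. It swaps in a simple nearest-centroid classifier: each recording is assumed to be already reduced to a fixed-length feature vector, the vectors for each sentence are averaged, and a new recording is assigned to the sentence whose average is closest. The function names and the toy feature values are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical training sample: (feature vector, sentence label).
# All feature vectors are assumed to have the same length.
Sample = Tuple[Sequence[float], str]


def fit_centroids(samples: List[Sample]) -> Dict[str, List[float]]:
    """Average the feature vectors recorded for each sentence label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, x in enumerate(features):
            sums[label][i] += x
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    training = [
        ([0.0, 0.0], "Hi, Rachel, how are you doing today?"),
        ([0.2, 0.0], "Hi, Rachel, how are you doing today?"),
        ([1.0, 1.0], "I love you!"),
        ([1.2, 1.0], "I love you!"),
    ]
    model = fit_centroids(training)
    print(predict(model, [0.9, 1.1]))  # I love you!
```

A nearest-centroid model is chosen here only because it is short enough to read in full; a system recognizing a larger vocabulary would likely need a more expressive model.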

The research team demonstrated the system’s accuracy by having the participants pronounce five sentences—both aloud and voicelessly—including “Hi, Rachel, how are you doing today?” and “I love you!”

The model’s overall prediction accuracy was 94.68%, and the actuation component amplified the participants’ voice signals. The results show that the sensing mechanism recognized the participants’ laryngeal movement signals and matched them to the sentences they intended to say.
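The article does not define exactly how the 94.68% figure was computed. Assuming it is the usual classification accuracy, it is the fraction of $N$ test trials in which the predicted sentence $\hat{y}_i$ matches the sentence $y_i$ the participant intended:

$$
\text{accuracy} = \frac{1}{N} \sum_{i=1}^{N} \mathbf{1}\!\left[\hat{y}_i = y_i\right]
$$

where $\mathbf{1}[\cdot]$ equals 1 when its condition holds and 0 otherwise. Under that reading, 94.68% corresponds to roughly 95 correct predictions per 100 trials.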

Going forward, the research team plans to continue enlarging the vocabulary of the device through machine learning and to test it in people with speech disorders.

Other authors of the paper are UCLA Samueli graduate students Ziyuan Che, Chrystal Duan, Xiao Wan, Jing Xu and Tianqi Zheng—all members of Chen’s lab.

More information:

Ziyuan Che et al, Speaking without vocal folds using a machine-learning-assisted wearable sensing-actuation system, Nature Communications (2024). DOI: 10.1038/s41467-024-45915-7

Citation:

Speaking without vocal cords, thanks to a new AI-assisted wearable device (2024, March 16) retrieved 16 March 2024 from https://techxplore.com/news/2024-03-vocal-cords-ai-wearable-device.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.